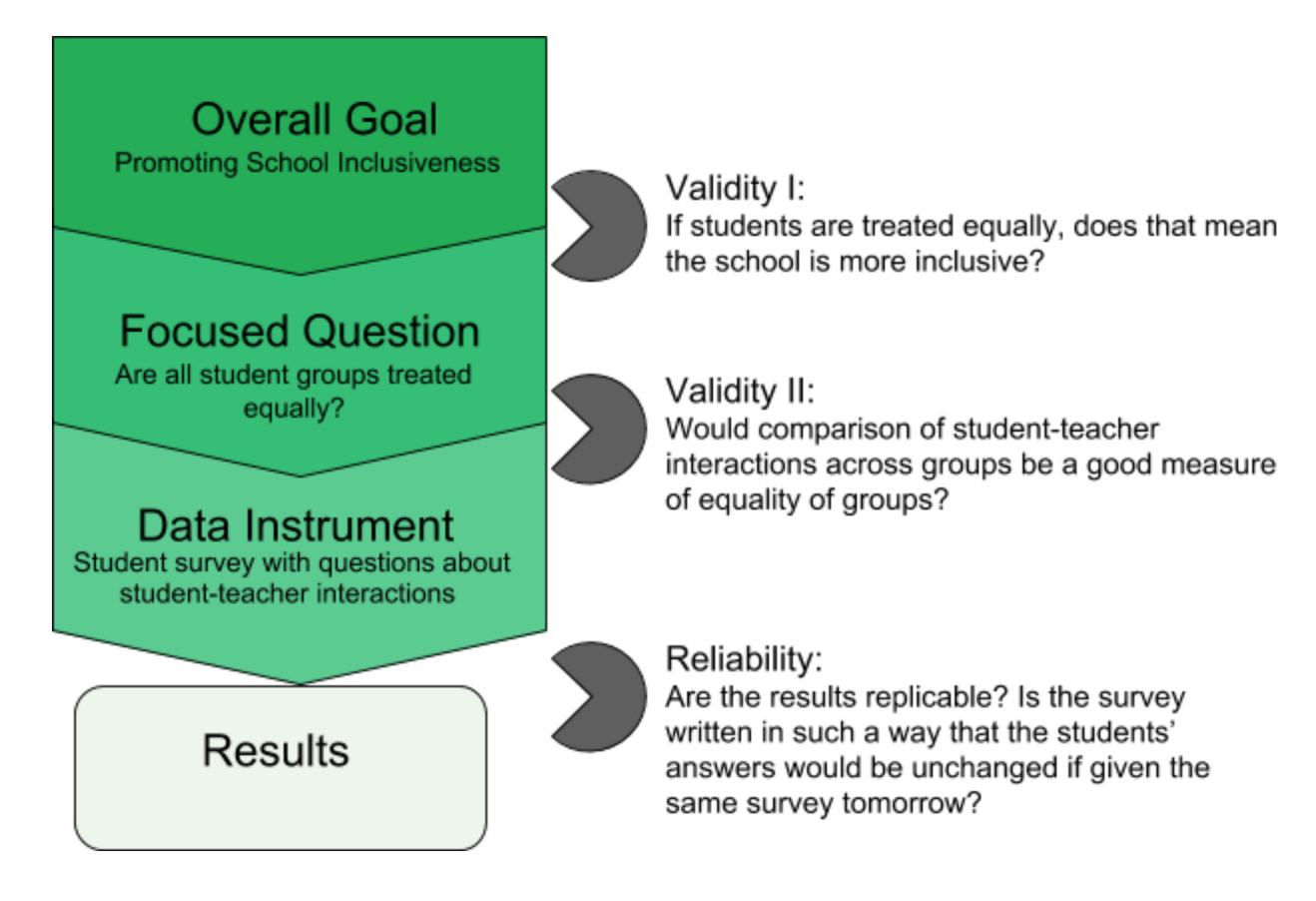

Of the two terms, assessment reliability is the simpler concept to explain and understand.

Here’s a good definition of reliability in a research context: if an assessment is reliable, the results will be very similar no matter when someone takes the test. So, if you’re focusing on the reliability of a test, the question to ask is: are the results of the test consistent? If someone takes the test today, a week from now, and a month from now, will their results be the same? If the results are inconsistent, the test is not considered reliable.

To determine the reliability of their tests, assessment companies pay close attention to two aspects of reliability in particular: test-retest reliability and internal consistency.

Test-retest reliability confirms consistency over time. The same group of people is given the test twice (a few days or weeks apart) in order to spot differences in results. Researchers then calculate the correlation coefficient between the two sets of scores, a statistical measure that for this purpose is read on a scale from 0 (no correlation) to 1 (perfect correlation). Since no test is going to be completely error-free, the correlation needs to be 0.7 or higher for the test to be considered reliable.

Internal consistency focuses elsewhere: it confirms that test items that are intended to be related are truly related. For example, if part of a pre-employment assessment is designed to measure math skills, test-takers should score equally well on the first and second halves of that part of the test. Assessment companies typically measure internal consistency by correlating scores on the first half of the test with those on the second half. Since these scores should be measuring the same thing, the correlation should be 0.7 or higher.

Validity is a bit more complex to define because it’s more difficult to assess than reliability. Validity in research refers to how accurate a test is, or, put another way, how well it fulfills the function for which it’s being used. In pre-employment assessments, this means predicting the performance of employees or identifying top talent. There are several ways for assessment companies to measure validity within tests, including content, criterion-related, and construct validity.

Content Validity

An assessment is said to have content validity when the criteria it measures align with and adequately cover the content of the job. The extent to which that content corresponds with success on the job is also part of determining how well the assessment demonstrates content validity.
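To make the two reliability checks described earlier concrete, here is a minimal Python sketch. It assumes the correlation in question is the ordinary Pearson coefficient (the article does not name a specific one), and the function names, the 0/1 item scoring, and all sample scores are hypothetical, chosen only for illustration.

```python
from math import sqrt

# Threshold quoted in the article; everything else here is illustrative.
RELIABILITY_THRESHOLD = 0.7


def pearson(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when all scores are identical")
    return cov / sqrt(var_x * var_y)


def split_half_scores(item_scores):
    """Sum each test-taker's item scores over the first and second half of the test."""
    first_half, second_half = [], []
    for items in item_scores:
        mid = len(items) // 2
        first_half.append(sum(items[:mid]))
        second_half.append(sum(items[mid:]))
    return first_half, second_half


def is_reliable(r, threshold=RELIABILITY_THRESHOLD):
    """Apply the 0.7 rule of thumb from the article."""
    return r >= threshold


if __name__ == "__main__":
    # Test-retest: the same (hypothetical) group scored on two sittings.
    first_sitting = [10, 12, 15, 18, 20]
    second_sitting = [11, 12, 14, 19, 20]
    r_retest = pearson(first_sitting, second_sitting)
    print("test-retest r:", round(r_retest, 3), "reliable:", is_reliable(r_retest))

    # Internal consistency: per-item scores (1 = correct, 0 = incorrect), made up.
    items = [
        [1, 1, 1, 1, 1, 0],
        [1, 0, 0, 1, 0, 0],
        [1, 1, 0, 1, 1, 0],
        [0, 0, 0, 0, 1, 0],
    ]
    first, second = split_half_scores(items)
    r_split = pearson(first, second)
    print("split-half r:", round(r_split, 3), "reliable:", is_reliable(r_split))
```

Splitting the items at the midpoint mirrors the first-half versus second-half comparison described in the text; other ways of splitting a test (for example odd versus even items) exist but are not covered by the article.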
